The current artificial intelligence (AI) boom feels like a recent phenomenon, but the field is much older. John McCarthy introduced the term “Artificial Intelligence” in 1955. Soon after, in 1959, Arthur Samuel at IBM coined the term “machine learning.” Both terms accompanied numerous achievements, from a robot that navigated a cluttered room on its own (1979) to face recognition software (1993). It wasn’t until the rise of GPT and its notable achievements, such as reportedly passing the bar exam with a top 10% score (a claim that has been heavily disputed, with later analysis placing the score much lower; see Sullivan, 2024), that the technology industry took an all-encompassing, FOMO-induced look at AI as a whole.

Is AI real intelligence? No, it is not. Several studies argue this point; Apple recently released a comprehensive paper contending that AI reasoning models create an illusion of thinking (Shojaee et al., 2025). Let me be clear: AI’s ability to mimic human behavior has granted it a license to participate in many arenas. But like an actor playing a part, it is nowhere near a capable replacement for the real deal. So, why all the fuss?

Where AI is Useful

AI thrives on entropy, with a keen ability to extract information from vast data clouds or lakes. Several times, in various ways, I’ve heard the story of a team that sets out to organize data and spends months or years on the project. They spend too much time debating how to structure the databases or how to craft routes for accessing the data, and they often fail or deliver late. AI can extract insights from unstructured data without that upfront work, but it is not a replacement for robust data infrastructure, and AI’s own outputs rely on that infrastructure.

AI feels like magic because you can ask it for baseball box scores or for an explanation of the quantum physics at work in the center of the Sun, all from the same input field. The system the team above built only provides data on a single subject. AI can give a reasonable answer to almost any question. However, it’s not always correct. But hey, neither are people.

Another key to human acceptance is how well Large Language Models (LLMs) can mimic human behavior. AI and LLMs are not the same thing: AI is the broad field, and an LLM is one specific kind of AI. An LLM uses extensive pattern matching to read, process, and generate responses that feel authentic.

The Corporate Appetite for AI

In mid-2025, we are in the midst of a corporate race to find AI success. Many company leaders who only see the magic are tasking their teams with finding ways to use AI to generate more revenue. Corporate AI adoption could mean augmenting creative ideas or, at worst, replacing entire teams of people. But an interesting statistic from S&P Global Market Intelligence (as reported by Industry Dive) shows a drastic increase in abandoned AI efforts: the share of companies that gave up on most of their AI initiatives rose from 17% in 2024 to 42% in early 2025.
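The size of that jump reads differently depending on how it is measured. The following is a minimal sketch, using only the 17% and 42% figures quoted above, that computes the change in percentage points and as a relative increase. The variable names are illustrative and not part of the survey.

```python
# Survey figures quoted in the text: share of companies that
# abandoned most of their AI initiatives.
rate_2024 = 17.0        # percent, 2024
rate_early_2025 = 42.0  # percent, early 2025

# Absolute change, measured in percentage points.
point_change = rate_early_2025 - rate_2024

# Relative change, measured against the 2024 baseline.
relative_change = point_change / rate_2024 * 100

# How many times larger the new rate is than the old one.
fold_change = rate_early_2025 / rate_2024

print(f"Percentage-point change: {point_change:.0f} points")
print(f"Relative increase: {relative_change:.0f}%")
print(f"Fold change: {fold_change:.1f}x")
```

In other words, the abandonment rate rose by 25 percentage points, which is well over double the 2024 rate.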

Now, you can’t make an omelet without cracking a few eggs, but we are witnessing a significant shift in large corporations giving up on AI. Why? Well, cost, data privacy, and security risks all rank high as reasons for the surveyed companies. These reasons all make sense, so let’s examine each one in turn.

Cost.

True AI power is expensive. Some of the incredible videos coming out of Google’s Veo 3 platform can spark genuine emotion, but they are costly to produce. Google’s AI Ultra subscription runs $249.99 a month, far more than a subscription to an extended Adobe suite. Independent users may struggle to justify spending as much on an AI subscription as they do on a month of groceries. The subscription grants a fixed allotment of tokens each month, so you can quickly run out if you are outputting 4K video.

This cost increases at scale for a corporation. It also increases inside of products. Slack (June 2025) costs $15 per user annually, but its AI upgrade is another $10 per user, a cost increase of roughly 67%. Slack uses a “buy-it-for-everybody or nobody” model, which can be a significant cost for both small and large companies.
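The arithmetic behind that increase, and how it compounds under an all-or-nothing licensing model, can be sketched as follows. This is a rough illustration only: the prices are the two figures quoted above, while the seat count and function names are hypothetical.

```python
# Per-user prices quoted in the text, for the same billing period.
BASE_PRICE = 15.00  # base plan, per user
AI_ADDON = 10.00    # AI upgrade, per user


def cost_increase_percent(base: float, addon: float) -> float:
    """Return the add-on cost as a percentage of the base price."""
    return addon / base * 100


def total_cost(seats: int, include_ai: bool) -> float:
    """Total cost when every seat must be on the same plan."""
    per_seat = BASE_PRICE + (AI_ADDON if include_ai else 0.0)
    return seats * per_seat


seats = 200  # hypothetical company size, for illustration only

print(f"Increase per user: {cost_increase_percent(BASE_PRICE, AI_ADDON):.1f}%")
print(f"Without AI: ${total_cost(seats, include_ai=False):,.2f}")
print(f"With AI:    ${total_cost(seats, include_ai=True):,.2f}")
```

Because every seat has to be upgraded together, the extra cost scales with total headcount rather than with the number of people who would actually use the AI features.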

Data Privacy.

The internet is often referred to as the cloud because it’s too complex to diagram. AI is another black box. You give it data, it gives you data. Only a small group of specialists can tell you what is going on inside that box. Most AI systems lack transparency, making it difficult for you to see what happens to your data. Many models are “learning models” that retain data to serve you better.

Knowledge and data are power, and when there is a risk of AI taking it, corporations take notice. Google, one of the largest technology companies, agreed in 2023 to pay $93 million to settle a lawsuit brought by the State of California (Smalley, 2023), which alleged that the company continued to collect location data even after users had turned off the feature. More lawsuits (Allyn, 2024) continue to result in settlements, and one could surmise that Google would rather pay settlements than play the game honestly. How can one assume an AI company will be any different?

Security Risks.

In my interactions with AI prompt engineers, I’ve seen several examples of humans getting AI to break its own rules. Corporations can define security parameters, but a system designed with numerous routes and possibilities is challenging to secure properly. A skilled prompt engineer can trick AI into breaking its predefined rules. And AI with stricter, less breakable rules becomes less powerful and less worthwhile to deploy.

AI At a Stretch, In a Vacuum

One only needs to review some of the larger AI blunders over the years to give any leader pause before adopting AI. McDonald’s spent three years developing an AI-driven ordering system for its drive-thrus before shuttering it in 2024. The reason? It kept malfunctioning, and social media was particularly harsh about it. One example involved two people managing to place 260 orders of chicken nuggets while pleading for it to stop.

The Chicago Sun-Times published an AI-generated summer reading list with fake books, iTutorGroup’s AI system discriminated against older applicants to its tutor program, and Amazon’s early AI screener was famously biased against female job applicants. iTutorGroup paid out more than a quarter of a million dollars to settle its lawsuit. The company attempted to claim that it wasn’t responsible for its AI, but the courts disagreed.

New York City unveiled an AI assistant last year to help citizens start and run businesses in the city. It wasn’t long until reports came out of it advising people to steal workers’ tips, fire people who complained of sexual harassment, and serve food previously grazed upon by rats.

The Corporate Need for Profits: The Shiny AI

Corporations have long used layoffs as a means to increase the bottom line. If you watch Wall Street, company layoffs often correlate with stock price increases. Investors view these moves as a company not afraid to reduce human capital in pursuit of the almighty dollar. While exceptions are standard, there is a reason why CNN publishes a fear and greed index for the market.

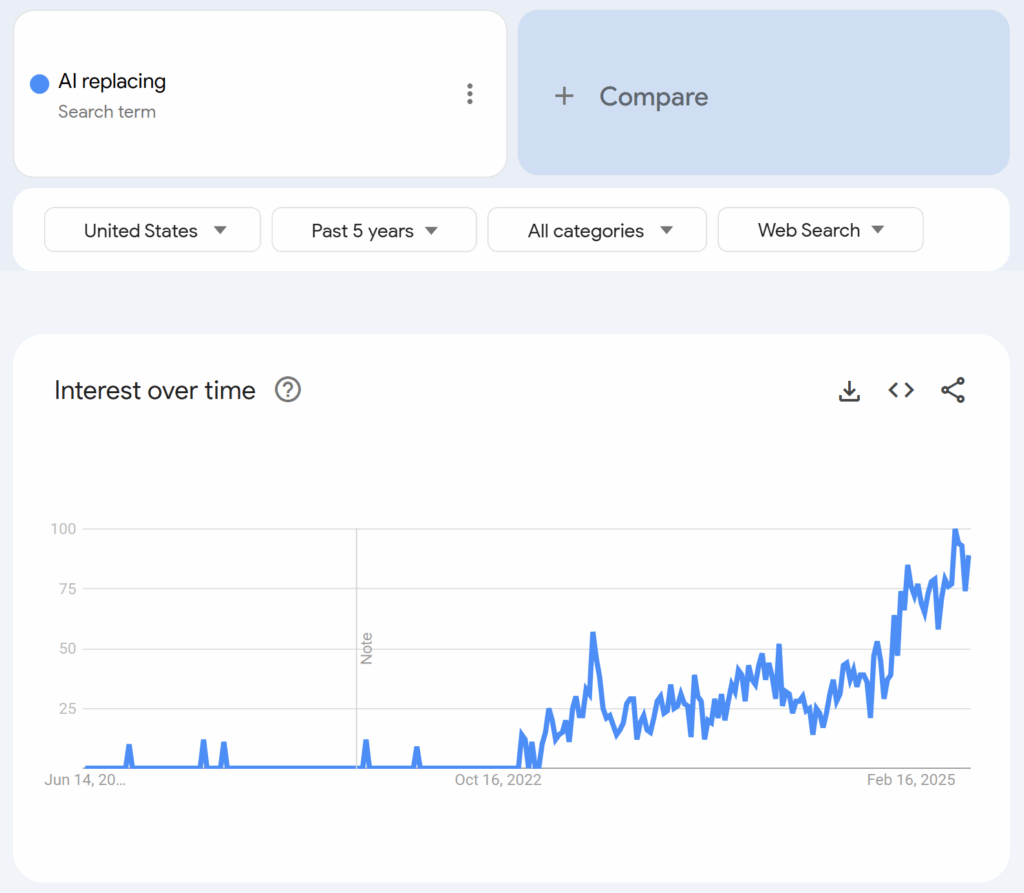

Many have discussed the correlation between AI and the replacement of human capital for years. Still, a noticeable trend has emerged over the past three years, where people are increasingly using Google to search for information about the topic. As sensational headlines capture any CEO’s quote on the subject, there are bold statements in the news predicting that an AI engine will replace large chunks of the human workforce.

AI has not often replaced workers but rather displaced jobs. Most displaced jobs are monotonous. Take the automobile factory worker. You’ll see highly precise robots on the assembly line moving heavy pieces of metal and welding them together. The welders are gone here, but now there is an entire company that builds and repairs the robots, plus more people to run them. The bots don’t come cheap, and while robots and software have displaced jobs, the company is probably paying more money out of pocket in exchange for production speed and robotic precision.

White Collar AI Replacement

One recent development is the replacement of people in white-collar jobs. There is merit to the idea, as some of these jobs are as boring as they come, consisting of a human entering data into a computer for eight hours a day. However, those problems were solvable long before the advent of AI, using optical character recognition (OCR) and basic software. If anything, introducing an AI platform into the mix runs the risk of sloppy data capture or incorrect data. AI can skip manual entry by dipping directly into a data lake, but that still leaves the inherent risks of misinformation and security breaches.

AI bias is another issue that companies struggle to address. Most AI systems aim to please, and a recent release of GPT (which OpenAI quickly rolled back) saw this aspect turned up to a very high level. It reached a point where the AI was advising people with really unsound ideas to execute them, because the AI wants to please its users. This level of AI butt-kissing would undoubtedly drive some white-collar workers to push initiatives that either fail or lead the company into unethical decisions that land it in court.

If you think people are tough to manage, AI is a whole new ballgame. You can’t drag the AI into court, and you can’t punish it. An entire system of punishment and reward exists in corporate America, which starts in public and private school education.

Why AI Could Be a Bubble

Interest in artificial intelligence development continues to climb, but the abandonment of corporate initiatives has risen sharply. This trend is a manifestation of the fear of missing out (FOMO) surrounding AI at the corporate level. As workers attempt to implement AI mandates from above, they are uncovering the real challenges of cost, privacy, and security.

Senior and executive management envisions a world where intelligent machines cost very little. Some view AI as a one-to-one replacement for human capital, while others see it as a tool to enhance their workers. Leaders like Andy Jassy at Amazon are directly predicting that AI will reduce their workforce. Jassy’s comments will greatly appeal to stockholders, but the actual savings will likely be little to none. Amazon plans roughly $100 billion in capital spending in 2025, much of it aimed at AI (Palmer, 2025), so it must utilize it for something, right?

Humans are terrible at predicting the future, myself included. Nobody saw AI’s recent rise coming. Take the last presidential election. For all the people who claimed to know how it would turn out, I doubt anyone predicted the events as they have unfolded from the pre-election period to today. We can only use the data we have from the past as the arrow of time continues to march forward.

One thing seems sure: AI hype is giving way to hard-earned pragmatism.

References

- Slack, Pricing, Retrieved from: https://web.archive.org/web/20250625222143/https://slack.com/pricing

- Shojaee, P., Mirzadeh, I., Alizadeh, K., Horton, M., Bengio, S., & Farajtabar, M. (2025). The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity. Apple, Retrieved from: https://ml-site.cdn-apple.com/papers/the-illusion-of-thinking.pdf

- Wilkinson, L., (2025), AI project failure rates are on the rise: report, CIO Dive, Retrieved from: https://web.archive.org/web/20250613212729/https://www.ciodive.com/news/AI-project-fail-data-SPGlobal/742590/

- Palmer, A., (2025), Amazon plans to spend $100 billion this year to capture ‘once in a lifetime opportunity’ in AI, CNBC, Retrieved from: https://web.archive.org/web/20250618070655/https://www.cnbc.com/2025/02/06/amazon-expects-to-spend-100-billion-on-capital-expenditures-in-2025.html

- Allyn, B., (2024), Google to delete search data of millions who used ‘incognito’ mode, NPR, Retrieved from: https://web.archive.org/web/20250618141059/https://www.npr.org/2024/04/01/1242019127/google-incognito-mode-settlement-search-history

- Sullivan, M., (2024), Did OpenAI’s GPT-4 really pass the bar exam?, Fast Company, Retrieved from: https://web.archive.org/web/20240610200814/https://www.fastcompany.com/91073277/did-openais-gpt-4-really-pass-the-bar-exam

- People of the State of California v. Google, LLC, No. 23CV422424, (Cal. Super. Ct. Santa Clara Cnty. 2023). Retrieved from: https://web.archive.org/web/20250512152518/https://s3.documentcloud.org/documents/23978742/filed-stamped-google-complaint.pdf

- Smalley, S., (2023), Google to pay California $93 million for allegedly lying to users about location data practices, The Record from Recorded Future News, Retrieved from: https://web.archive.org/web/20250515092347/https://therecord.media/google-settles-for-lying-geolocation

- (2022) EEOC Sues iTutorGroup for Age Discrimination, EEOC, Retrieved from: https://web.archive.org/web/20250614000347/https://www.eeoc.gov/newsroom/eeoc-sues-itutorgroup-age-discrimination

- Hochreutiner, C., (2019), The History of Facial Recognition Technologies: How Image Recognition Got So Advanced, AnyConnect Academy, Retrieved from: https://web.archive.org/web/20250315173058/https://anyconnect.com/blog/the-history-of-facial-recognition-technologies

- Iriondo, R., (2018), Amazon Scraps Secret AI Recruiting Engine that Showed Biases Against Women, CMU, Retrieved from: https://web.archive.org/web/20250415144250/https://www.ml.cmu.edu/news/news-archive/2016-2020/2018/october/amazon-scraps-secret-artificial-intelligence-recruiting-engine-that-showed-biases-against-women.html

- (1988), Stanford Cart, The Computer Museum, Retrieved from: https://web.archive.org/web/20181004164437/http://www.computerhistory.org/collections/catalog/102723520

#############

Disclosure: The author wrote this article using AI-assisted tools, AI research, and AI-generated illustrations. All content was written by and reviewed by the author.